Other Projects

XR/VR experiments, collaborations, and technical demos.

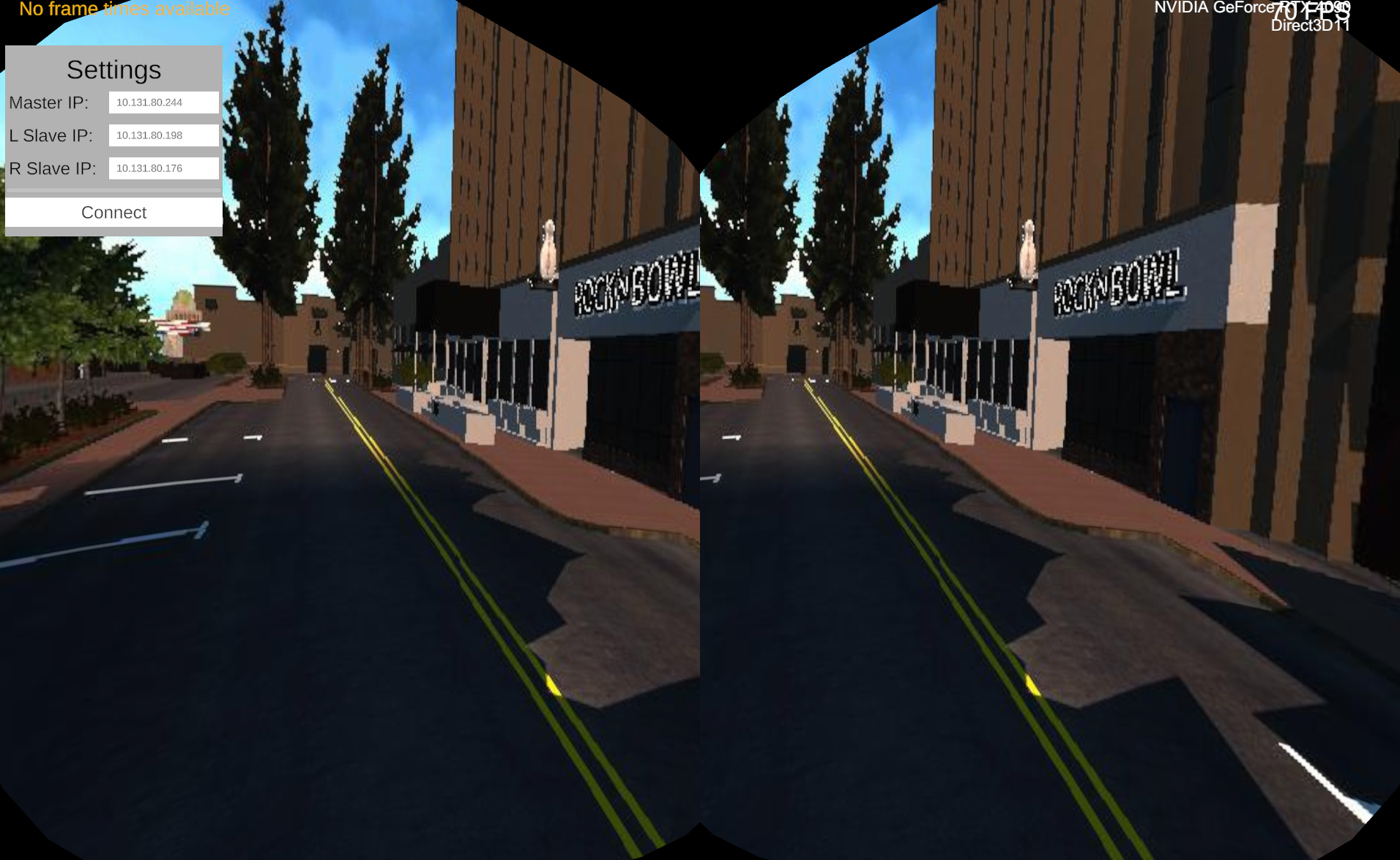

SFG-VR: Distributed VR Renderer

A distributed VR rendering system that offloads visual computation to two networked PCs.

The Meta Quest (master) sends headset transforms to slave PCs, which render separate halves of the view. Their outputs are stitched in real time and displayed in VR.

This is an extension of the SFG architecture from ATC’24. It features Unity + WebRTC networking, stereo occlusion rendering, and live UI-based IP config.
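To make the data flow concrete, here is a minimal Python sketch of the two ideas described above: packaging a headset transform for the render PCs and stitching two half-frames back together. It is not the project's Unity/WebRTC code. The `HeadPose` message layout, the JSON encoding, and the assumption that the halves are left and right image halves are all illustrative choices, not details taken from the project.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class HeadPose:
    """Hypothetical headset transform message (layout is an assumption)."""
    position: tuple  # (x, y, z) in meters
    rotation: tuple  # quaternion (x, y, z, w)
    frame_id: int    # lets the headset match returned halves to a pose


def encode_pose(pose: HeadPose) -> str:
    """Serialize a pose so the same payload can be sent to each render PC."""
    return json.dumps(asdict(pose))


def decode_pose(payload: str) -> HeadPose:
    """Rebuild the pose on a render PC; JSON lists become tuples again."""
    data = json.loads(payload)
    return HeadPose(tuple(data["position"]), tuple(data["rotation"]), data["frame_id"])


def stitch_halves(left, right):
    """Join two half-frames (lists of pixel rows) side by side.

    Assumes each PC returns one horizontal half of the same frame,
    so both halves must have the same number of rows.
    """
    if len(left) != len(right):
        raise ValueError("half-frames must have the same height")
    return [left_row + right_row for left_row, right_row in zip(left, right)]
```

In a real pipeline the frames would be video textures rather than nested lists, and the `frame_id` check would guard against stitching halves rendered from different poses.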

View on GitHub →

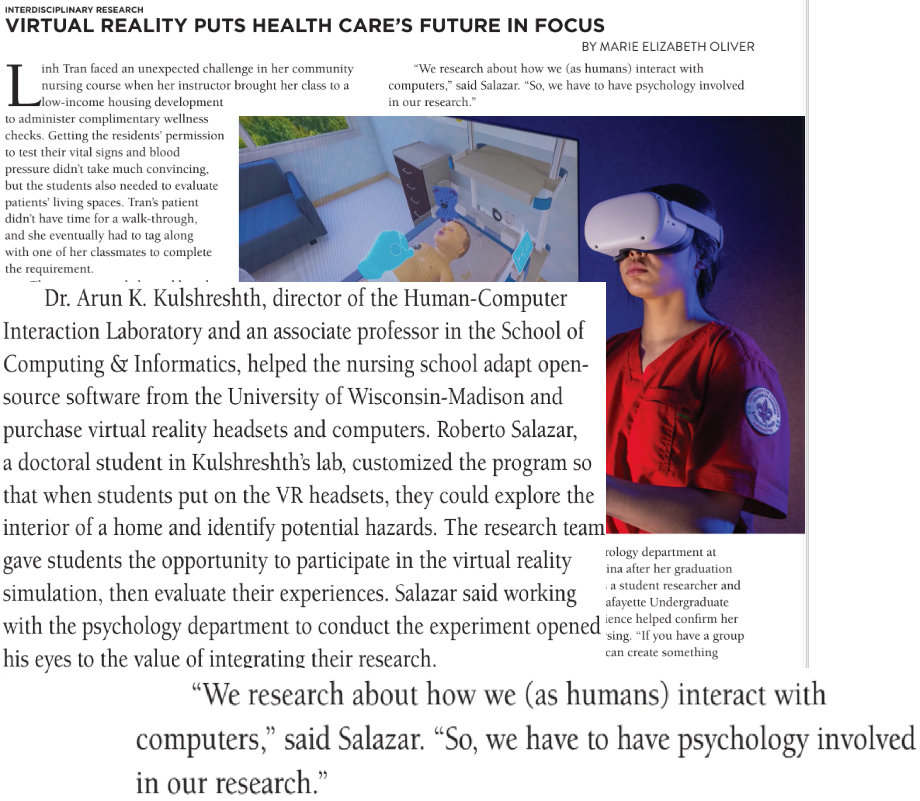

VR Nursing Simulator Featured in La Louisiane Magazine

Our interdisciplinary research on the VR Nursing Visit Simulator was spotlighted in the Spring 2024 issue of La Louisiane, UL Lafayette’s official magazine.

The article, titled "Virtual Reality Puts Health Care’s Future in Focus", covers how students and faculty from Nursing, Psychology, and Computer Science collaborated to simulate home visits and improve nursing education through VR.

Roberto Salazar helped adapt the system using Unity and Meta Quest to allow immersive, interactive home safety evaluations.

📖 Read the article in La Louisiane →

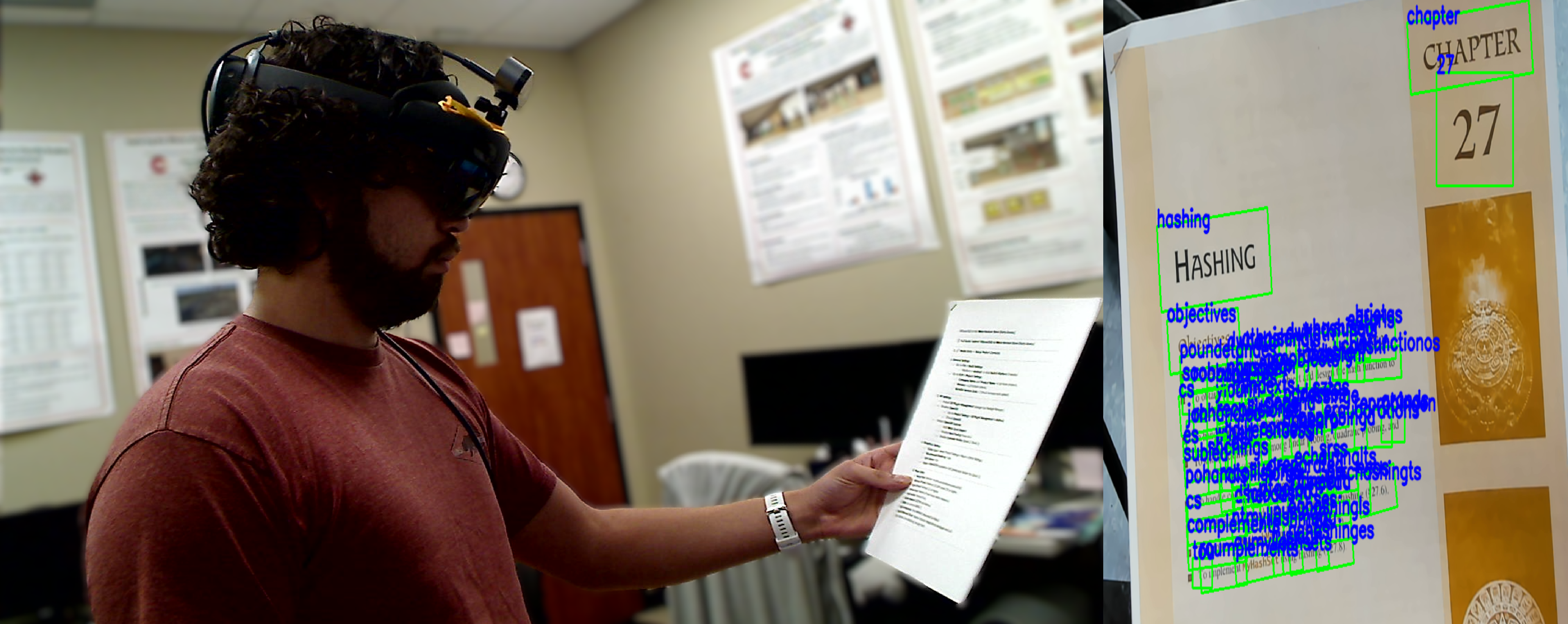

AR Motivation for Reading (HoloLens + GPT)

This HoloLens 2 prototype uses AR to boost motivation during difficult readings. A mounted external webcam enhances document capture, and OCR is applied in real time to detect and extract text from the user’s view.

The recognized text is corrected and passed to GPT-4 to generate voice-based explanations, motivational insights, or fun facts. The output adapts dynamically to the user's educational level, from elementary to PhD.
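The OCR-to-prompt step can be sketched as follows. This is a hedged illustration in Python, not the prototype's actual code: the cleanup rules, the intermediate education levels, the mode names, and the prompt wording are all assumptions, and the call to the language model itself is left out.

```python
import re

# Only "elementary" and "PhD" come from the description; the levels in
# between are assumed for illustration.
LEVELS = ("elementary", "middle school", "high school", "undergraduate", "PhD")

# Hypothetical output modes matching the three kinds of response mentioned.
MODES = {
    "explanation": "Explain the passage in plain spoken language.",
    "motivation": "Give a short motivational insight about the passage.",
    "fun_fact": "Share one fun fact related to the passage.",
}


def clean_ocr_text(raw: str) -> str:
    """Light OCR cleanup: rejoin hyphenated line breaks, collapse whitespace."""
    text = re.sub(r"-\s*\n\s*", "", raw)
    return re.sub(r"\s+", " ", text).strip()


def build_prompt(raw_text: str, level: str, mode: str = "explanation") -> str:
    """Build the text prompt that would be sent to the language model."""
    if level not in LEVELS:
        raise ValueError(f"unknown education level: {level}")
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    passage = clean_ocr_text(raw_text)
    return (
        f"The reader is at the {level} level. {MODES[mode]} "
        f"Keep the answer short enough to be read aloud.\n\nPassage: {passage}"
    )
```

Keeping prompt construction separate from the model call makes it easy to test the level and mode handling without network access.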

Future versions aim to reduce cognitive load by integrating non-intrusive motivational feedback, such as memes, GIFs, or visual highlights, that enriches the reading experience without distraction.

3D Scan of the LITE Center

A detailed photogrammetry model of the LITE building in Lafayette, LA, created using a DJI Mini 2 drone and RealityCapture.

This project explores drone-based 3D scanning for digital preservation and integration into virtual environments.

Tools: DJI Mini 2, RealityCapture

Location: Louisiana Immersive Technologies Enterprise (LITE), Lafayette, LA

#Photogrammetry #RealityCapture #DroneMapping

Urgent, Hard Problems I Solve Fast

I help labs and startups build functional AI/VR prototypes fast.

I turn ideas into Unity/GPT demos in days, not months. Ideal for MVPs and pilots.

GPT apps that automate domain tasks like summarization or planning.

Immersive training apps with feedback and logging for safety/health.

I help labs quickly build what they proposed in grant submissions.

Need something fast? Let’s connect.